Published by Jeremy. Last Updated on August 5, 2024.


We're going to ask you to put your conspiracy theory hat on for a moment because we wanted to dive into a controversial theory that may be a sign of the future of the internet.

Enter the Dead Internet Theory, an interesting conspiracy theory that may actually be on to something.

So grab your tin foil hat, and let's go down the rabbit hole.

- Note: As with all conspiracy theories, do not take this one too seriously.

What is the Dead Internet Theory?

The Dead Internet Theory is a conspiracy theory, growing in popularity, which holds that most of the internet now consists of the following:

- Bot activity

- Auto-generated content (such as AI output), and

- Algorithmic curation designed to manipulate people

Essentially, instead of being an open source of information for humans by humans, the internet is turning into a place where bots and algorithms reign supreme. The internet is becoming “dead” because the vast majority of content that is being created (and how it is being served) is by non-humans. This could be for political purposes (e.g., spreading misinformation), commercial (e.g., getting you to see more ads or buy a product), or some other factor entirely.

Now, we will say that the more you read about the Dead Internet Theory, the more it goes off the rails. Think along the lines of mass government conspiracies, companies paying influencers to be on the take and keep up the illusion, and the like. Yes, we all know that social networks pay creators for reach, but it is a pittance, and they're certainly not telling anyone what to post in the slightest.

But there is an element of the Dead Internet Theory that we find fascinating, and that is the conversation about just how much bots and AI control the internet. These exist, they are a problem, and they're getting worse. Social networks are not only encouraging people to create content with AI, but they are rewarding it, too.

As such, there is a compelling notion within all this that the internet is actually dying because so much of it is fake.

So let's dive into some of the biggest issues facing the internet right now and think about them in the realm of the Dead Internet Theory!

Social Media Switches to Discovery Mode

When most social networks were founded in the early 2000s and 2010s, they almost always had the same premise: stay connected with friends and businesses you like.

The primary driving force behind all of these networks was the ability to connect with entities that reflect your interests. Like our blog? Follow our channels to see more of our content! It was pretty straightforward and worked.

It wasn't until apps like TikTok came on the scene (2016) and exploded in popularity in the early 2020s that the format of social media began to shift. This was primarily because newer networks prioritized a Discovery algorithm over a follower-based one. Here, the algorithms evolved well enough to predict what you may like based on your activity, as opposed to what you expressly opted in to see. These were so remarkably well done that most major networks began to copy the style immediately.

Why is this a problem? First, Discovery feeds seem to pick winners through arbitrary means outside of anyone's control. Slow down on a post for a fraction of a second too long, and you have a new feed of recommended content without even a click. Second, with more Discovery content being shown in the feed, visibility is reduced for accounts that users have explicitly opted in to see.

For this one, we want to focus on the first point more than the second, purely because the Dead Internet Theory focuses on content outside of organic human activity. If Discovery content is served to you without a clear opt-in, well, that level of algorithmic activity is what the Dead Internet Theory is referring to. Who created that content? Do they own the rights? Is it real or AI? How did I get selected to see it? In many cases, no one knows, and that's a problem.

To showcase where this becomes a big issue, it is best to dive into an example of a weird scenario I had come across on my own Discovery feed.

What Are We Discovering, Exactly?

Note: I'm being vague on which network this was on purpose. I am not sharing photos of the post in particular because I do not know all the details behind the scenes and do not want to violate anyone's copyrights if one of the posts is indeed an original. That said, I have screenshots saved for everything discussed below.

One day, I was scrolling through a social media feed as normal, and I saw a post on a topic I am interested in but from a source I am not a follower of (i.e. a Discovery-style recommendation). This happens often, so I didn't think much about it, moved on to subsequent content on the app I actually want to see, and let that be that.

I only noticed it because the post shared “facts” about the topic, with one of the first items being blatantly incorrect. It also featured a photo of a person who I believe is fairly popular within that sphere, but not the person who posted the photo. It was weird, but I moved on because a lot of Discovery content is, well, terrible, so none of this was shocking.

A few days later, I saw another post that gave me a moment of déjà vu. I was pretty certain it was the same post, but in a different community, posted by someone with a different username, and with a different featured image. It jumped out at me fairly quickly because the same false information mentioned earlier showed up again (it had to do with a “Today is National [Whatever] Day” claim, and none of the posts went up anywhere near the real date, which was weeks earlier).

Upon copying the text and pasting it into the search bar of said network, I found no fewer than five instances of the exact same copy shared by five different accounts. Between them, the posts used four unique images (one repeat), several of which showed the same person (never the poster themselves), and none went up on the right day. The kicker: each had anywhere from 300 to 7,000+ reactions. Whatever it was, this post was seen by a lot of people.
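For anyone who wants to run the same copy-and-search check across a batch of posts they have already collected, here is a minimal sketch in Python. It is only an illustration: the function names (`normalize`, `group_duplicates`) and the sample posts are made up for this example, and it does not call any social network's API. It simply groups posts whose text is identical once case, punctuation, and spacing are ignored.

```python
import hashlib
import re
from collections import defaultdict


def normalize(text: str) -> str:
    """Lowercase, drop punctuation, and collapse whitespace so trivial edits do not hide a copy."""
    text = re.sub(r"[^\w\s]", "", text.lower())
    return re.sub(r"\s+", " ", text).strip()


def group_duplicates(posts):
    """Group (author, text) pairs by a hash of the normalized text.

    Returns only the groups that are shared by more than one distinct author.
    """
    groups = defaultdict(set)
    for author, text in posts:
        digest = hashlib.sha256(normalize(text).encode("utf-8")).hexdigest()
        groups[digest].add(author)
    return {d: sorted(a) for d, a in groups.items() if len(a) > 1}


# Made-up sample data (hypothetical), not the real posts described above.
sample_posts = [
    ("account_a", "Today is National Whatever Day! Here are 5 facts."),
    ("account_b", "today is national whatever day!  Here are 5 facts"),
    ("account_c", "A completely different post about something else."),
]

duplicates = group_duplicates(sample_posts)
for authors in duplicates.values():
    print(f"Same copy posted by {len(authors)} accounts: {', '.join(authors)}")
```

Exact matching like this only catches straight copy-and-paste jobs. Posts that have been reworded would need a fuzzier comparison, which is beyond this sketch.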

The person in each image (when visible) was the same, but there was no conventional brand attached to make it seem like it was self-promotion. So we're left with either people copying and pasting content with different images (i.e. copyright infringement- a big issue on social networks already), a coordinated posting spree by bots or fake accounts to game the algorithms (possibly around the images), or something else I am not thinking of entirely.

Personally, I don't really care about what scam of the day is going on in groups/outlets/pages that are not my own. That is really none of my business. But what bothers me is that this is what is now being displayed in a Suggested feed targeting me when I never opted in to begin with, especially considering these are duplicate posts of questionable origin as noted above.

So, is this a coordinated attempt at self-promotion or algorithm gaming from a creator? Copyright infringement from people reposting content they shouldn't be? Bots? Bad AI? I can't say for sure (AI checking tools on the latter said no for that one, for the record). But what I do know is that algorithms are promoting this content instead of punishing it when something is clearly off, and that is a problem for everyone else who is an ethical content creator simply trying to have their real content seen by real people.

If the Dead Internet Theory has any legs to it, we're simply going to be seeing more of this in the future.

Search Engines Are Not Curating the Internet, They're Becoming It

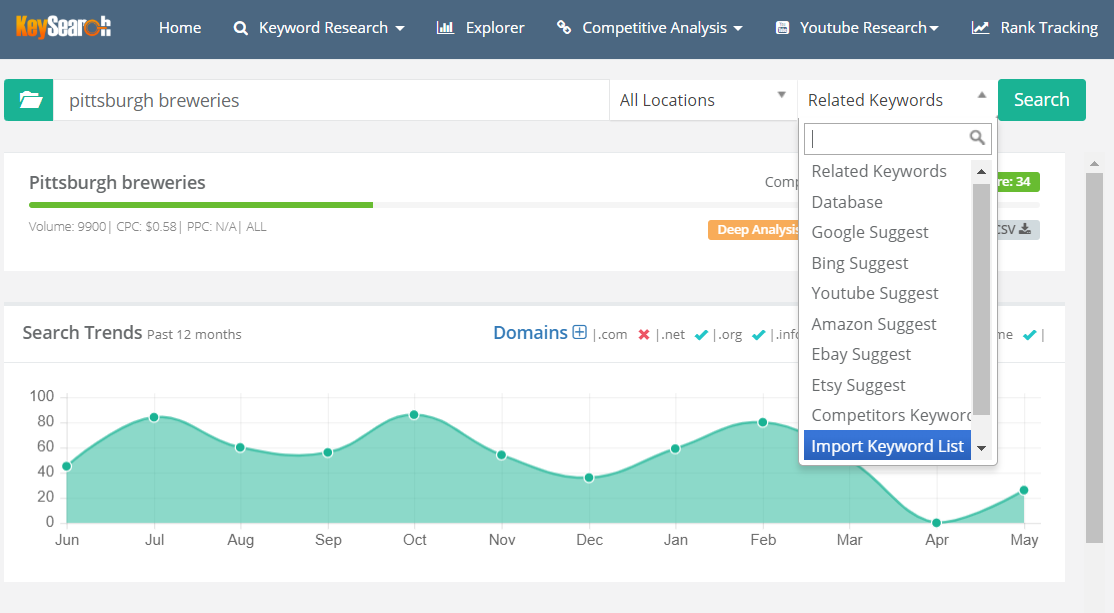

For many years, one of my main talking points at conferences, particularly around the topic of SEO, has been “the internet is filling up fast.” A quick summary here is that more and more people are creating content, expertise in all niches is rising at a rapid pace, websites are gaining more and more authority, and it is becoming harder and harder to stand out and rank in search.

While there is always room for new things, the internet is packed with virtually everything: almost all knowledge from the entirety of human existence, personal experiences across an array of spectrums, and so much more. So while standing out on social may be getting a slight boost from Discovery feeds (one very real benefit, we admit), becoming visible in search engines is more difficult than ever.

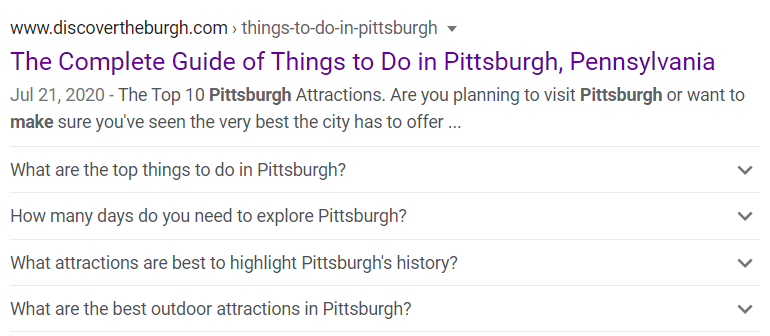

One of the big complaints about the push for AI search results and LLMs is that they are taking the sum total of all human knowledge and experiences and repurposing it to their advantage. Instead of curating the internet to provide better search results linking to the original creator, they are adapting it to own the space themselves—often while removing the creator (you know, a real human) from the process entirely.

This leads into the Dead Internet Theory as well because a single entity (in this case, an algorithm or large language model owned by a company) is determining what content to deliver, making determinations on what is truth (a tricky subject especially with experiential/opinion based topics), and often doing so with hilarious or potentially dangerous results.

Of course, we will be the first to admit that the entire field of SEO was designed to crack into ranking in search algorithms from the creator side (which is a form of manipulation). The issues with SEO are well known, and, quite frankly, also a problem. But SEO differs starkly from AI Overviews and LLMs for two specific reasons: there are millions of creators competing for positions in search, and results are aggregated to give users a chance to decide what they want to consume.

When an LLM/AI model decides a limited result for you through unknown mechanisms, the Dead Internet Theory rears its ugly head once again.

Sure, we'll probably get to a future where AI modeling can be optimized and gamed via a whole new branch of SEO. But for now, and especially with a lack of citations and apparent competitiveness, we are left with only one possible conclusion: search engines are becoming the internet, and a program is in control of what you see more than ever.

What Happens When Humans Become Irrelevant?

Finally, the one issue with the Dead Internet Theory that I think could be expanded upon more is that algorithms could be getting to the point where human activity on any respective outlet becomes irrelevant (put your tinfoil hat on now).

We could discuss how the Discovery feeds promote bad content and reduce reach to true followers until we're blue in the face. We could also discuss how AI-created content is making the internet truly fake. But for this take, I want to discuss the bots that are likely plaguing the networks in particular.

Bots often use real accounts with a fake persona to generate mass engagement (see click farms). We often focus on the fake engagement as the problem on social media, but for my own take on the Dead Internet Theory, I want to talk about the accounts themselves: they are still likely very real accounts used for nefarious purposes.

Yes, these bots can throw off engagement metrics, but here is another interesting thought: do they also see ads? Unless there is code I'm unaware of that lets a bot engage with a post without using the app itself, these accounts likely have to click, scroll, and navigate social networks much as human users do, albeit much, much faster.

How many of these trigger an ad load from a real advertiser?

Personally, my own contribution to the Dead Internet Theory, and where that tinfoil hat comes in handy, would be that networks are not cracking down on bot activity hard enough purely because they could be bringing in incredible revenue. Furthermore, we may even get to a point in the future where these networks are so full of bots that human engagement becomes unnecessary- the bots may be profitable enough.

This will likely be when the Dead Internet Theory truly comes to pass. Fake content, engaged with by fake profiles, while consuming paid ads from very real advertisers. Who needs humans?

Are we there yet? Probably not. Are we headed that way? Well, as they say, it is only a theory.

Ultimately, while I do not think we are quite at the Dead Internet Theory stage just yet, there is some truth to the conspiracy theory all the same. Algorithms are moving more into Discovery, with a program deciding what you see without an opt-in more and more. AI/LLMs are removing the competitive and human-to-human nature of the internet in an instant. Bot activity is clearly a problem now more than ever with no visible end in sight.

It certainly does make you wonder how much of user popularity is real, what content is real and what is fake, how much is manipulated by bots, and, perhaps more importantly, who is controlling it? When the answer to all of this becomes “not a human,” the Dead Internet Theory may truly come to pass.

Join This Week in Blogging Today

Join This Week in Blogging to receive our newsletter with blogging news, expert tips and advice, product reviews, giveaways, and more. New editions each Tuesday!

Can't wait til Tuesday? Check out our Latest Edition here!

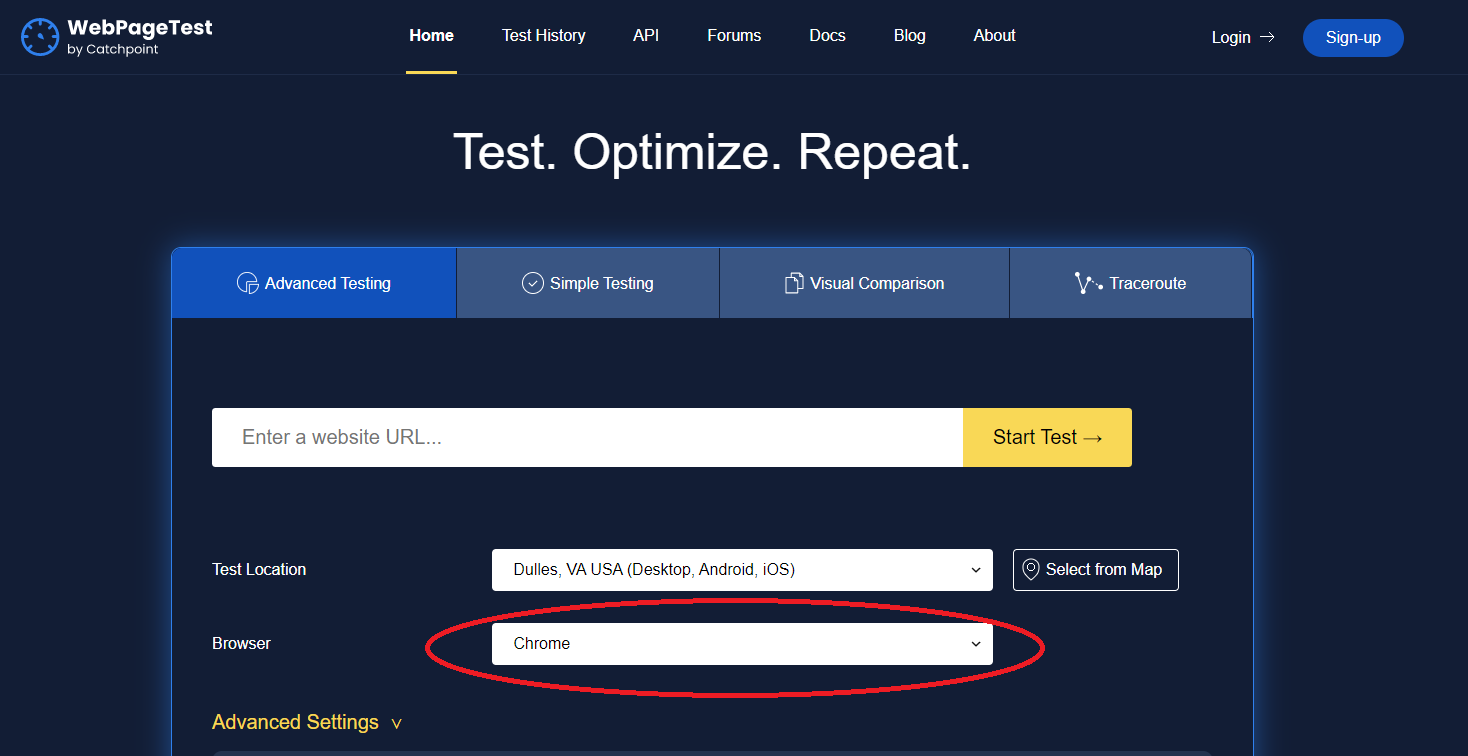

Upgrade Your Blog to Improve Performance

Check out more of our favorite blogging products and services we use to run our sites at the previous link!

How to Build a Better Blog

Looking for advice on how to improve your blog? We've got a number of articles around site optimization, SEO, and more that you may find valuable. Check out some of the following!